I still remember the first time I realized my blog posts might be fodder for AI models—that queasy sensation of seeing fragments of my writing reappear in polished robotic prose. It’s odd, isn’t it, that the creators powering our new digital gods rarely get so much as a nod? This post is my take on that creeping unease, the unfairness baked into today’s AI economy, and some unconventional remedies we might—quirks and all—try to set things right. Meanwhile, imagine stumbling across a painting you made in a stranger’s home, except the stranger now runs an art museum powered by your brushstrokes. Welcome to the world of generative AI.

A Secret Ingredient: Human Creativity in AI’s Success

As a blogger, I’ve often spotted snippets of my own posts resurfacing in generative AI output, an uncanny reminder that models from OpenAI, Google, and Meta are trained on vast digital repositories: blogs, podcasts, forums, and digital commons like Wikipedia and the Internet Archive. The irony is clear: creators’ work is highly visible, while creators’ rights remain largely invisible. Recent US legal rulings now allow scanning second-hand books for AI training without royalty payments, treating it as fair use rather than as a use creators must be paid for. Engagement stats (1.3K posts, 10.9K debates) show widespread concern among designers and technologists. As I’ve written,

“AI’s success is built on human creativity, thought, and labor.”

Yet, the economic impact of AI rarely rewards those whose content forms its backbone.

Why ‘Do-Not-Train’ Might Be the Next ‘Robots.txt’

Drawing inspiration from the web’s quirky robots.txt protocol, a tool I once tried (and failed) to use to keep my podcast out of search engines, I see the need for a standardized, enforceable do-not-train protocol for AI models. Like robots.txt, this would let creators opt out or set clear conditions for AI training. Yet robots.txt is purely advisory, and just as some web crawlers ignore it, many AI bots today disregard consent signals, which makes holding AI companies accountable a real challenge. Robust digital consent should be a basic right, not a privilege. As I’ve written,

“A ‘do-not-train’ protocol would be a first step towards genuine digital self-determination.”

Sustainable AI practices demand both technical standards and real enforcement: platforms must answer for violations, not just pay lip service to ethics.
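To make the analogy concrete, here is a minimal sketch of how the existing robots.txt signal works, using Python’s standard `urllib.robotparser`. This is not the do-not-train protocol I’m arguing for, only the advisory mechanism we have today. The robots.txt content and the `may_crawl` helper are hypothetical examples of mine; `GPTBot` and `CCBot` are the user-agent names published by OpenAI and Common Crawl.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: ask two AI-related crawlers to stay out,
# and leave the site open to everyone else.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: CCBot
Disallow: /

User-agent: *
Allow: /
"""


def may_crawl(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the given robots.txt text permits user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    page = "https://example.com/posts/hello"
    for agent in ("GPTBot", "CCBot", "Googlebot"):
        print(agent, "allowed:", may_crawl(ROBOTS_TXT, agent, page))
```

Note what the sketch does not do: nothing here stops a crawler that never asks the question. The check only matters if the bot chooses to run it, which is exactly why a real do-not-train standard would need enforcement behind it.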

Royalty Roulette: Can Collective Rights Organizations Save the Day?

Collective rights organizations like PRS (music), ALCS (authors), and DACS (visual artists) have long collected and distributed royalties on behalf of creators. Yet digital creators such as bloggers, podcasters, and forum posters lack a comparable body to negotiate with AI giants like OpenAI or Meta. When I calculated a potential AI dividend for my own blog posts, the result was a laughably small check, proof that individuals have little bargaining power alone. Pooling rights through a digital collective could transform AI compensation models, making payouts meaningful and efficient. Collective rights organizations are the backbone of sustainable creative economies. Crucially, any framework must set aside funds for digital commons like Wikipedia and the Internet Archive (including its Wayback Machine), which fuel AI’s ecosystem but often lack stable support.

Training Dividends and the Digital Sovereign Wealth Fund: AI’s Social Safety Net?

Imagine if every AI company paid a training dividend, a share of profits, to the people whose content actually powers its models. Inspired by Norway’s oil-based sovereign wealth fund, a digital sovereign wealth fund could channel AI revenues into national or international trusts. These funds would distribute income to registered creators and support vital digital commons like Wikipedia. This approach would reshape AI compensation, ensuring the economic gains of AI benefit many, not just a few investors. As I’ve written, “A digital sovereign wealth fund could turn AI from an extractor into a sustainer of collective prosperity.” Training dividends could cushion creative workers against AI-driven disruption and reward those whose work underpins the AI revolution. The payments should be proportional, not punitive, so that they support both innovation and fairness.
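The arithmetic behind a proportional dividend is simple enough to sketch. The example below assumes a fixed percentage of the pool goes to the digital commons and the rest is split pro rata among registered creators. Everything in it is hypothetical: the `split_dividend` function, the “contribution units”, and the figures are mine for illustration, and how contribution would really be measured is the hard, unsolved part.

```python
def split_dividend(
    pool_cents: int,
    commons_percent: int,
    contributions: dict[str, int],
) -> tuple[int, dict[str, int]]:
    """Split a dividend pool between the digital commons and creators.

    pool_cents: total pool in whole cents (integers avoid float rounding).
    commons_percent: share reserved for the commons, from 0 to 100.
    contributions: hypothetical "contribution units" per registered creator.
    Returns (commons_cents, payouts_by_creator).
    """
    if pool_cents < 0:
        raise ValueError("pool_cents must not be negative")
    if not 0 <= commons_percent <= 100:
        raise ValueError("commons_percent must be between 0 and 100")
    if any(units < 0 for units in contributions.values()):
        raise ValueError("contribution units must not be negative")
    total_units = sum(contributions.values())
    if total_units <= 0:
        raise ValueError("at least one creator needs a positive contribution")

    commons = pool_cents * commons_percent // 100
    creator_pool = pool_cents - commons

    # Pro rata share for each creator, rounded down to a whole cent.
    payouts = {
        name: creator_pool * units // total_units
        for name, units in contributions.items()
    }

    # Any cents lost to rounding go to the commons, so nothing disappears.
    commons += creator_pool - sum(payouts.values())
    return commons, payouts


if __name__ == "__main__":
    # Illustrative figures only: a $10,000 pool with 20% set aside.
    commons, payouts = split_dividend(
        1_000_000, 20, {"blogger": 120, "podcaster": 300, "forum": 580}
    )
    print("commons:", commons, "payouts:", payouts)
```

Sending the rounding remainder to the commons is one design choice among several; a real fund might carry it over to the next payout period instead.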

Treading the Tightrope: Fairness Without Stifling Innovation

I firmly believe the world doesn’t need less AI progress, just a fairer distribution of its rewards. Over-regulation risks driving innovation to countries less concerned with AI governance; China isn’t waiting for the West to catch up. Simply taxing tech giants hasn’t worked either: strategies like the “double Irish with a Dutch sandwich” show how easily profits can be routed around national borders. Instead, we need pragmatic, global AI compensation models that don’t punish progress.

“My intention isn’t to penalize innovation or stunt technology.”

Like Universal Basic Income, the goal is to ensure that those who fuel AI’s breakthroughs share in its benefits. Real fairness means actionable mechanisms (consent protocols, collective rights organizations, and training dividends) that support sustainable AI growth without stifling the very innovation we all depend on.

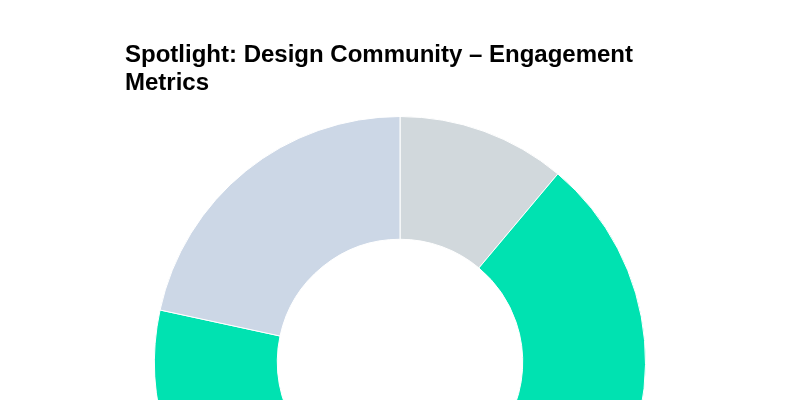

Spotlight: Design Community, Metrics, and the Broader Cultural Conversation

Lively debates about AI’s impact on jobs, creator rights, and the challenges facing the AI industry are everywhere, from LinkedIn and Notion to Clearleft, LDConf, and UXLondon. As a design founder and investor, I’ve seen firsthand how these conversations shape policy. At a London conference, I once dropped an “AI bubble” joke to total silence, but it sparked real reflection. Engagement metrics like 1.8K posts, 10.9K debates, and 3.5K forum threads show this isn’t niche; the community is deeply invested. Topics like “enshittification,” job market pressures, and AI performance benchmarks fuel the dialogue. As I often say,

“The AI bubble may burst, but the conversation about creator rights will only grow.”

Community forums and conferences keep these vital discussions, and the calls for a fairer AI economy, alive.

Conclusion: The Future Is (Partly) Ours to Write

AI owes its momentum to millions of unheralded creators. Imagine if Wikipedia, podcasts, and blogs vanished overnight: what would remain for AI to learn from? Sustainable AI, and any lasting economic growth from it, depends on honoring these creators. My proposals (consent protocols, collective rights organizations, and training dividends) are pragmatic fixes that can sit alongside fair use doctrine without hindering innovation. Progress and fairness are not at odds; both are urgent, both are possible. My hope is that AI’s story becomes one of broad inclusion, not mere consolidation. Picture an annual ‘AI Creators Gala,’ celebrating those whose work powers the future.

The next chapter of AI could be one in which everyone who helped write the first page finally gets a royalty check.

Let’s make sure the benefits of AI are shared by all who built its foundations.